Over the past half-year, AI has been significantly transformed by the introduction of advanced Generative AI tools. This shift has affected not only creativity and automation but also data quality and management. The rise of powerful models, especially Large Language Models (LLMs) such as ChatGPT, has created new opportunities for improving data accuracy, relevance, and connectivity across various industries. ChatGPT’s ability to simulate human-like conversation and decision-making has made it a milestone in AI development, drawing global attention and marking a major turning point in public acceptance of AI. Having reached an unprecedented 100 million monthly active users within just two months of launch, this tool signifies more than a technological achievement; it heralds a new era in data processing and analysis.

The transformative power of AI in recent times hinges on the capabilities of Large Language Models (LLMs), which are pivotal both as generative AI and as foundation models. These models have achieved two significant breakthroughs. First, they have mastered the complexities of language well enough to generate contextually rich, intent-aware text. Second, they are designed for extensive pre-training followed by fine-tuning, which allows for diverse applications and makes data processing more flexible and useful.

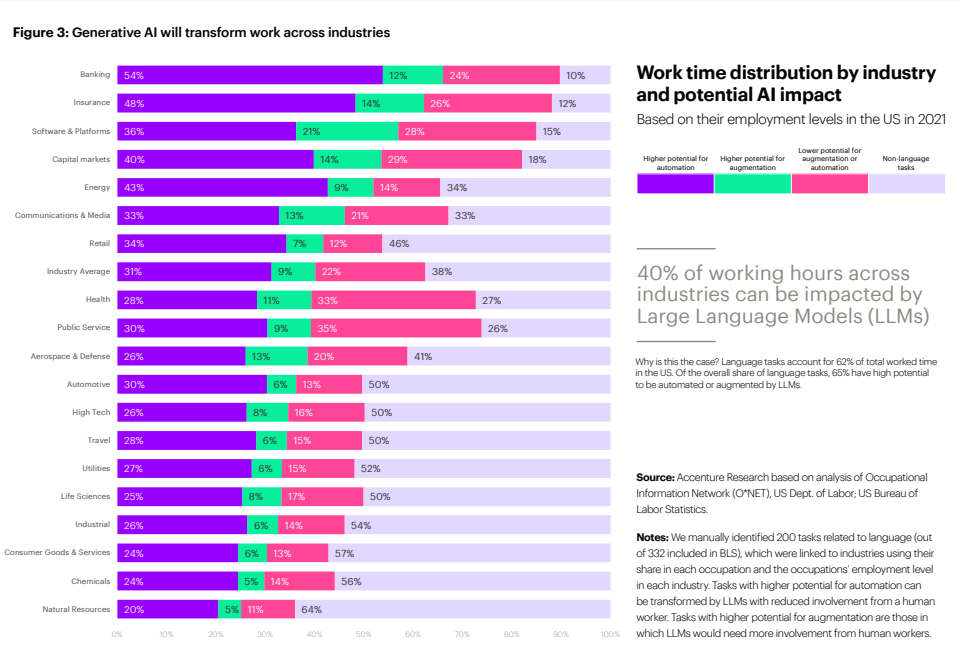

Business leaders are keenly aware of the potential of LLMs like GPT-4, with an estimated 40% of working hours in various industries poised for impact. This influence is particularly notable in improving data quality, where these models’ augmentation and automation features promise to significantly elevate productivity and creativity in data handling and analysis.

Moreover, 97% of top international business leaders agree that AI foundation models are set to transform how AI is incorporated and used across various data formats. This near-unanimous view highlights an increasing recognition of the vital role that Generative AI plays in raising the standard of data, allowing for more precise, interconnected, and insightful data analysis. As we explore this era further, the significance of AI in improving data quality and reshaping our approach to handling and understanding data becomes increasingly apparent, signifying a new phase in the evolution of AI and data science.

The rise of Generative AI

Generative AI has quickly become a driving force in the field of artificial intelligence, leading to significant changes in technology and setting new benchmarks for data quality and business creativity. Gartner predicts that by 2025, 30% of outbound marketing messages from large organizations will be generated by AI, underscoring the growing influence of these advanced models on communication and data interaction. Additionally, KPMG’s AI Risk Survey Report shows an 85% expectation for greater use of AI in predictive analytics, highlighting the increasing dependence on AI’s capacity to interpret and handle intricate data sets.

This paradigm shift isn’t just theoretical. The 2022 KPMG technology survey reveals that 50% of participants have experienced positive returns on their investment in AI technologies. This highlights the tangible advantages of improving data quality and operational efficiency in business. The growing use of AI tools, exemplified by the widespread adoption of ChatGPT, signifies a major step forward in technology integration and its capacity to transform data interaction and management.

The contrast between traditional AI and Generative AI is rooted in the diversity and intricacy of their outcomes. Generative AI opens up new possibilities for digital creativity and problem-solving by producing a wide range of complex results, including high-resolution images and contextually rich text. However, this versatility requires significant resources for creating and running models, making it more accessible to well-equipped institutions.

Source – Accenture

The role of Generative Artificial Intelligence in enhancing data quality

The transformative power of generative AI is reshaping the landscape of data quality, bringing about fundamental changes in data collection, management, analysis, and reporting. Its far-reaching impact delves deep into the core aspects of enhancing data quality, addressing questions about what, why, where, and how.

Data collection

Generative AI greatly enhances the speed and effectiveness of data collection, leading to more relevant and focused results. Tools such as Qualtrics and QuestionPro are incorporating AI to accelerate survey development by customizing surveys based on historical data. Additionally, the use of AI-driven chat-based surveys for data collection represents a significant shift in gathering data, providing more engaging and interactive methods. Furthermore, generative AI’s search and summarization abilities improve desk research, enabling more comprehensive information mining.

Data management

Automated data cleaning, powered by AI, streamlines the process of removing Personally Identifiable Information (PII) from data, ensuring efficiency and compliance with privacy regulations. AI is adept at ongoing data quality monitoring, which is particularly important in today’s environment of complex and abundant data streams. Additionally, AI plays a crucial role in efficiently transforming and classifying diverse data streams as the need for integration grows.
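
As a rough illustration of the PII-removal step described above, the sketch below masks e-mail addresses and phone-like numbers with regular expressions. The patterns and the `redact_pii` helper are assumptions made for this example, not a complete or production-grade approach; real pipelines typically need much broader coverage (names, addresses, national identifiers) and often combine rules with model-based detection.

```python
import re

# Illustrative patterns only (assumptions for this sketch); they do not
# cover every e-mail or phone format and will miss other PII types.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


sample = "Contact Jane at jane.doe@example.com or +1 (555) 010-9999."
redacted = redact_pii(sample)
print(redacted)
```

Note that the name "Jane" is left untouched here, which shows why pattern-based cleaning alone is rarely sufficient for compliance purposes.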

Data analytics

AI can process unstructured data and provide valuable insights and summaries without relying on task-specific training datasets prepared in advance, enabling companies to extract crucial information from previously difficult-to-analyze sources at a larger scale. Furthermore, AI’s scalability in qualitative research allows for the efficient analysis of extensive volumes of customer feedback, delivering vital insights into consumer sentiment and preferences. As AI continues to advance, its ability to select and deploy analytical models promises to streamline decision-making processes and improve overall efficiency. The time has come for businesses to embrace AI’s potential for managing unstructured data and extracting actionable insights from diverse sources.

Data visualization and reporting

AI integration offers a crucial advantage by rapidly condensing intricate data, resulting in more effective reporting. This saves time and enables businesses to respond swiftly to emerging trends. Additionally, image-generation tools like DALL-E can enhance data presentation, making reports more visually engaging and easier to comprehend. By leveraging AI-generated images and graphics, reporting becomes accessible to a wider audience and helps readers grasp complex information quickly.

Insight democratization through information synthesis

AI’s capacity to distill vast amounts of data into coherent and valuable insights is remarkable, outstripping even the most advanced knowledge management systems. This democratizes insight: AI makes it possible for users at all levels to access and receive concise, pertinent information. A recent piece in the Harvard Business Review underscores the significant impact of generative AI on the pace, variety, and creativity of inquiries. According to the study, 79% of participants generated a larger number of questions when using generative AI, and 75% of the time those questions were unique for their team, company, or sector.

Challenges and Limitations of Generative AI

While Generative AI has immense potential, understanding its limitations is crucial for realistic and effective implementation. Let’s delve into the various challenges organizations might encounter when adopting Generative AI.

Hallucination

What: Generative AI can produce high-confidence responses not grounded in training data, leading to fictional outputs.

Implications: While beneficial for creative purposes like art generation, this becomes problematic in practical applications like copywriting or code generation, where accuracy is crucial.

Biases

What: Generative AI inherits biases present in its training data.

Implications: This can lead to skewed, unfair, or unethical outcomes, especially in sensitive applications involving human interaction or decision-making.

Lack of human reasoning

What: Generative AI operates on statistical correlations rather than logical reasoning.

Implications: This limits its ability to understand context or make reasoned judgments, impacting applications that require complex decision-making or deep comprehension.

Limited context window

What: These models have a finite context window, limiting the size of input and output they can handle effectively.

Implications: This constrains the depth and scope of interactions or analyses, particularly in scenarios requiring extensive background information or detailed explanations.
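
One common workaround for a finite context window is to split long inputs into smaller, overlapping pieces and process them one at a time. The sketch below is a minimal illustration that counts whitespace-separated words; the `chunk_words` name and the word-based limit are assumptions for this example, since real models measure their limits in tokens rather than words.

```python
def chunk_words(text: str, max_words: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most max_words words.

    Consecutive chunks share `overlap` words so that context is not
    lost entirely at chunk boundaries.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")

    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once the current chunk already reaches the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


chunks = chunk_words("a b c d e f g h i j", max_words=4, overlap=1)
print(chunks)
```

Chunking keeps each request within the limit, but the model still sees only one piece at a time, so the constraint on depth and scope described above does not disappear.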

Reliance on training data

What: Generative AI models depend heavily on their training data and, on their own, cannot integrate additional sources or datasets without retraining.

Implications: This limits their adaptability and responsiveness to new or evolving information, restricting their application in dynamic environments.

Lack of source referencing

What: On their own, these models cannot reliably provide references or sources for their output.

Implications: This makes it challenging to validate the accuracy or credibility of the generated content, especially in academic, research, or journalistic contexts.

Data synthesis challenges

What: Synthetic data generation may not accurately represent real-world outliers.

Implications: This is critical for applications where outliers are significant, and additional steps are required to ensure a realistic data representation.
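
The outlier problem can be seen in a toy experiment. The sketch below builds a hypothetical dataset with a handful of extreme values, then generates "synthetic" data by fitting a single Gaussian and sampling from it. This naive generator is an assumption chosen for illustration and is far simpler than real synthetic data tools, but it shows how a generator that captures only the overall distribution can fail to reproduce rare extremes.

```python
import random
import statistics

# Hypothetical "real" dataset: 995 ordinary values (45 to 55)
# plus 5 extreme outliers.
real = [50 + (i % 11) - 5 for i in range(995)] + [1000] * 5

# Naive synthetic generator (an assumption for this sketch):
# fit one Gaussian to the data and sample from it.
mu = statistics.mean(real)
sigma = statistics.stdev(real)
rng = random.Random(0)  # fixed seed so the run is repeatable
synthetic = [rng.gauss(mu, sigma) for _ in range(len(real))]

THRESHOLD = 500  # anything above this counts as an outlier here
real_outliers = sum(v > THRESHOLD for v in real)
synthetic_outliers = sum(v > THRESHOLD for v in synthetic)

print("outliers in real data:     ", real_outliers)
print("outliers in synthetic data:", synthetic_outliers)
```

Because the threshold lies more than six standard deviations above the fitted mean, the Gaussian sample will almost certainly contain no values beyond it, even though the real data contains five.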

Enterprise adoption hurdles

What: Difficulty in gaining consensus among stakeholders for using synthetic data.

Implications: This reflects the challenge of integrating generative AI into existing business frameworks and practices, particularly when addressing data biases and ensuring compliance with industry standards.

Data maturity and gap assessment

What: Need for enterprises to assess their data and analytics programs before adopting synthetic data.

Implications: The lack of a thorough assessment can lead to ineffective generative AI integration, risking misalignment with business objectives and exacerbating existing data quality issues.

How can organizations leverage their data within these generative models?

Many organizations are eager to use their data in generative models, training them to respond to queries about proprietary information that can be shared with internal teams or made accessible to customers. These efforts fall into the following categories:

Talent development and Expertise

Organizations must prioritize talent development and expertise cultivation to leverage their data effectively within generative AI models. Firstly, a focus on skills acquisition is crucial. This involves building or enhancing the team’s competencies in areas pivotal to generative AI, like data governance and model management. Developing these skills ensures that teams are proficient in managing the data and understand the nuances of how generative AI interacts with this data. Upskilling existing staff or recruiting new talent with a deep understanding of generative AI is key.

This foundational expertise is necessary for launching initiatives that deliver immediate value, ensuring that the organization’s use of generative AI is technically sound and strategically aligned with its broader objectives.

In addition to individual skills, forming a cross-disciplinary team is essential. Such a team, comprising business leaders, technologists, creative minds, and external experts, can think innovatively about potential use cases of generative AI. This diversity in perspective enables the identification of valuable applications and designs for AI deployments that are effective, compliant with relevant regulations, and mindful of cyber risks.

Furthermore, a comprehensive understanding of the underlying technologies of generative AI is imperative. Educating the workforce about the current capabilities, limitations, and risks associated with AI establishes a baseline of knowledge crucial for effective deployment. It is vital to keep up with technological advancements and understand their implications on business risks and opportunities. This ongoing education ensures that the organization remains agile and can adapt its strategies in response to evolving AI landscapes.

Strategy and Innovation

Business-driven mindset

Organizations need to take a business-focused approach supported by strategic change management to harness data’s power effectively in generative AI models. This involves two key priorities: creating new models and improving existing ones to deliver immediate benefits while also striving for a fundamental transformation of business processes in the long term. At the core of this approach is cultivating a culture that encourages AI experimentation, which is essential for achieving early wins and promoting wider adoption. The experimentation should have two main components: one aimed at achieving quick successes through easily applicable models for rapid results, and the other focused on ambitious goals such as rethinking business operations, customer interaction, and product or service innovation using customized models based on the organization’s data.

As organizations explore and navigate these opportunities for transformation, they acquire tangible value and deeper insights into which AI applications are most suitable for different scenarios, recognizing that the level of investment and complexity varies. This method also enables them to rigorously test and improve their strategies related to data privacy, model accuracy, bias, and fairness, as well as to understand when to implement safeguards involving human oversight. With 98% of global executives acknowledging the significance of AI foundational models in their future strategies, embracing this business-oriented and change-driven approach is crucial for companies seeking to integrate generative AI effectively and responsibly.

Prompt tuning

Prompt tuning, as popularized by tools like Bing Chat, is a significant advancement in how AI systems improve search and response capabilities. In this approach, the system actively searches the internet for relevant information and supplies what it finds to the model, which then creates responses that are naturally phrased and enriched with references to the gathered data. The technique is more precisely known as retrieval-augmented generation, and it effectively combines traditional search engines with advanced AI language models. Its increasing popularity is largely due to its ease of implementation and the lower investment and expertise it requires compared to more complex AI applications. This democratizes access to advanced AI functionalities, allowing a wider range of businesses to benefit from these technologies without extensive in-house AI expertise or resources. Companies like Microsoft and OpenAI are leading this movement, working to make such technologies more user-friendly and accessible. The effectiveness of the approach lies in its ability to provide more contextually relevant and comprehensive responses by drawing on real-time internet data for up-to-date information and diverse perspectives when necessary.
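
The retrieve-then-generate pattern described above can be sketched in a few lines. The example below is a simplified illustration under stated assumptions: it ranks a small in-memory set of hypothetical documents by word overlap with the question (real systems use a search engine or vector index), and it stops at building the prompt rather than calling any particular model. The function names `retrieve` and `build_prompt` are invented for this sketch.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc_id: len(query_words & tokenize(documents[doc_id])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Assemble a prompt that grounds the answer in the retrieved sources."""
    ids = retrieve(query, documents, k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"{context}\n"
        f"Question: {query}"
    )


# Hypothetical proprietary documents.
docs = {
    "policy": "Refunds are issued within 14 days of a return request.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "careers": "We are hiring data engineers in Berlin.",
}

question = "How many days do refunds take?"
prompt = build_prompt(question, docs, k=1)
print(prompt)
```

Because the source ids travel with the retrieved text, the generated answer can point back to where its information came from, which is what makes this approach attractive when references matter.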

Data governance and Quality assurance

Incorporating data governance

Organizations must guarantee that their data is accurate, up-to-date, and of high quality, especially as generative AI models operate with minimal human supervision. Establishing a comprehensive data governance program that encompasses data quality, adherence to regulations, and privacy is vital. Regular data quality assessments are essential to identify unreliable or outdated inputs that could negatively impact the model’s results. It is also important to address ethical concerns, bias in training, and legal aspects of data privacy and regulatory compliance. This ensures that the data used in generative AI models is of top-notch quality, ethically obtained, and compliant with applicable laws.
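
A regular data quality assessment can start with very simple automated checks. The sketch below flags records with missing required fields or with a last-updated date older than a chosen threshold. The schema (`customer_id`, `email`, `updated_on`) and the one-year freshness limit are hypothetical values picked for illustration; an actual governance program would define its own rules.

```python
from datetime import date

# Hypothetical schema and freshness threshold for this sketch.
REQUIRED_FIELDS = ("customer_id", "email", "updated_on")
MAX_AGE_DAYS = 365


def assess_record(record: dict, today: date) -> list[str]:
    """Return a list of data quality issues found in one record."""
    issues = []
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            issues.append(f"missing {field}")
    updated = record.get("updated_on")
    if isinstance(updated, date) and (today - updated).days > MAX_AGE_DAYS:
        issues.append("stale")
    return issues


records = [
    {"customer_id": 1, "email": "a@example.com", "updated_on": date(2024, 5, 1)},
    {"customer_id": 2, "email": "", "updated_on": date(2021, 1, 15)},
]

today = date(2024, 6, 1)
report = {r["customer_id"]: assess_record(r, today) for r in records}
print(report)
```

Records with a non-empty issue list can be excluded from model inputs or routed to a data steward before they influence results.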

Addressing data output

Dealing with the results produced by generative AI, such as synthetic data, demands thoughtful attention within the company’s governance structure. It is crucial to set up clear rules governing these outputs, especially if they include sensitive, proprietary, or confidential information. Creating ownership chains and protocols for managing AI-generated results is essential. Choices must be made about how this data fits into the current corporate system, whether stored in databases or reintegrated into models for additional training. Safeguarding and using this generated data correctly are key to preserving data integrity and security.

Preparing proprietary data

Access to industry-specific organizational data is a must for tailoring foundation models. Developing a strategic data architecture is essential for organizations, encompassing the acquisition, expansion, enhancement, and establishment of a modern enterprise data platform, ideally on cloud infrastructure. Such a platform makes data widely accessible and usable throughout the organization and eliminates isolated storage. Whether business data is analyzed in a unified way or managed through a decentralized architecture such as data mesh, this improves the capacity to use it effectively in generative AI models.

Technological infrastructure and Resources

Integration with current infrastructure

In order to effectively implement Generative AI, organizations must develop a strategy that integrates with their current AI frameworks. This involves securing access to high-quality enterprise data, establishing AI governance, and adapting processes to maximize the potential of cognitive workers. Due to the rapid pace of technological advancement, collaboration with external partners and third-party organizations is essential. This ensures that Generative AI initiatives align with broader AI strategies and allows access to valuable external expertise and insights needed to navigate this dynamic field.

Training and tuning existing models

Developing a new advanced language generation model is a monumental task that requires substantial computational power, financial commitment, and extensive data. Companies like Google, Meta, and Amazon, with the necessary infrastructure, are the main players in this field. For most organizations, this path is less traveled due to the immense resources and expertise needed. This underscores the significance of having a strong technological foundation supporting the development of sophisticated AI models.

Many organizations find it more practical to refine and adapt existing generative AI models rather than create new ones. Platforms such as OpenAI allow organizations to fine-tune base models on their own data and adjust the responses for specific needs. Using APIs or fine-tuning existing models with private data is a cost-effective way to take advantage of generative AI. This approach saves resources by avoiding the extensive infrastructure required to build a model from the ground up. It enables organizations to customize AI capabilities without the significant investment in technology and data needed for new model development.
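
Adapting an existing model with private data usually begins with converting that data into training examples. The sketch below turns hypothetical question-and-answer pairs into JSON Lines, one chat-style example per line. The `messages` layout with `system`, `user`, and `assistant` roles is assumed here as a typical chat fine-tuning format; the exact schema varies by provider and should be checked against the documentation of whichever platform is used.

```python
import json


def to_chat_example(question: str, answer: str, system: str) -> dict:
    """Wrap one Q&A pair as a chat-style training example (assumed layout)."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs: list[tuple[str, str]], system: str) -> str:
    """Serialize Q&A pairs as JSON Lines, one example per line."""
    return "\n".join(json.dumps(to_chat_example(q, a, system)) for q, a in pairs)


# Hypothetical proprietary Q&A data.
qa_pairs = [
    ("How many vacation days do new hires get?", "New hires receive 20 days per year."),
    ("Who approves travel expenses?", "The employee's direct manager approves them."),
]

jsonl = to_jsonl(qa_pairs, system="You answer questions about internal HR policy.")
print(jsonl)
```

Since these examples contain proprietary information, the same governance and privacy checks that apply to other data should be run before any file like this leaves the organization.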

Ethical AI and Compliance

Establishing a responsible framework led by top management

A responsible AI framework takes a top-down approach, beginning with the CEO and cascading through all levels of the company to embed ethical AI principles into every aspect of AI use. A crucial aspect is educating and raising awareness among team members to ensure they understand AI’s ethical implications and responsibilities. Implementing preventive measures is essential to proactively manage risks from the early stages of AI development, particularly when training models with sensitive data. This proactive approach should be integrated into all business processes involving AI so that ethical considerations remain part of AI implementation. Establishing a robust governance structure to oversee AI use cases is crucial, aligning AI applications with organizational values, policies, and regulatory requirements for consistent adherence to ethical standards and legal compliance.

Technology and cultural integration for responsible AI

Effective oversight and transparency in AI operations rely on the integration of AI monitoring tools and human-in-the-loop systems. Embedding ethical principles into the development lifecycle and promoting a culture of continuous learning is crucial for responsible AI use. As AI continues to advance, organizations need to adjust their ethical frameworks to tackle emerging challenges and uphold ethical standards in AI utilization.
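
A human-in-the-loop system can be as simple as a routing rule that decides which AI outputs are released automatically and which go to a reviewer. The sketch below assumes that each output arrives with a confidence score between 0 and 1 and uses a hypothetical list of sensitive terms; both the threshold and the terms are illustrative placeholders rather than recommended values.

```python
def route_output(
    text: str,
    confidence: float,
    threshold: float = 0.8,
    blocked_terms: tuple[str, ...] = ("ssn", "password"),
) -> str:
    """Decide whether an AI output is auto-approved or sent to a human.

    Outputs mentioning a blocked term always go to review; otherwise the
    decision depends on the (assumed) confidence score.
    """
    lowered = text.lower()
    if any(term in lowered for term in blocked_terms):
        return "human_review"
    return "auto_approve" if confidence >= threshold else "human_review"


print(route_output("Quarterly summary looks fine.", confidence=0.93))
print(route_output("Quarterly summary looks fine.", confidence=0.41))
print(route_output("The password is hunter2", confidence=0.99))
```

Logging each routing decision alongside the reviewer's verdict gives the monitoring data needed to adjust the threshold over time.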

Conclusion

To harness the full potential of AI technology, it’s crucial to comprehend the opportunities, implications, and risks involved. Research generative AI and explore its functionalities, including the training processes, strengths, and limitations of large language models. Gain a deep understanding of your organization’s data ownership, freshness, and quality metrics for each dataset. Identify datasets suitable for integration into a generative model. Then, pinpoint potential applications for this technology within your organization. Define objectives and the value proposition of generating creative output to benefit your staff and customers. Begin with small-scale implementations before expanding to larger projects, both to improve the model and to understand the implications of introducing AI technology into your organization. Lastly, promote data literacy around generative AI throughout the organization so that everyone understands its reliance on training data to generate output. This broader understanding will empower individuals to use the technology effectively through informed decision-making.